Biography

Ju Xu graduated from Peking University (PKU). He is now an algorithm engineer at Alibaba, focusing on recommender systems. He has published several papers in NeurIPS, AAAI, WWW, MICCAI, TPAMI, and other venues. As an undergraduate at Renmin University of China, he focused on data mining.

Timeline

Publications

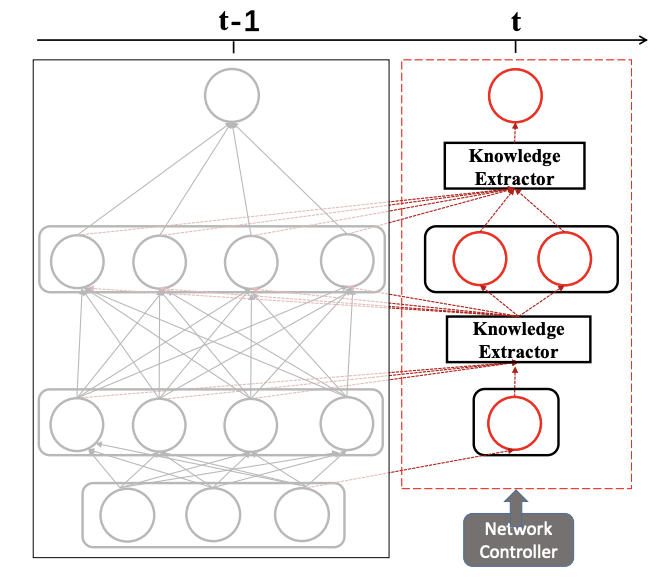

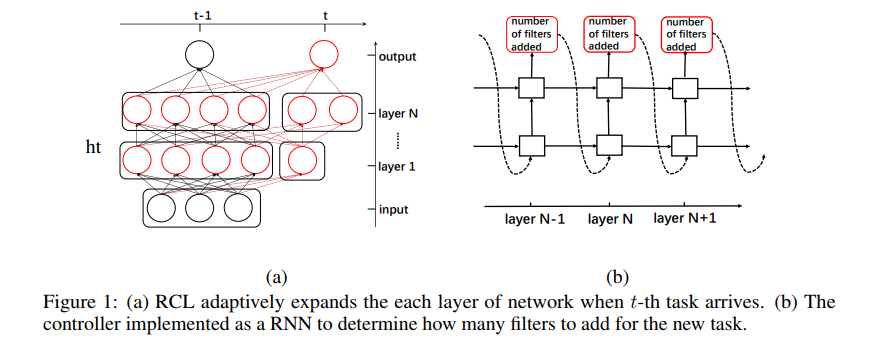

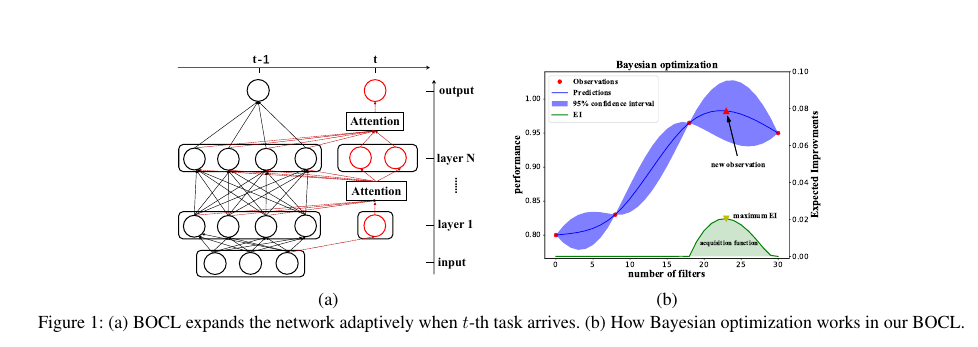

In this work, we propose a new adaptive progressive network framework including two models for continual learning: Reinforced Continual Learning (RCL) and Bayesian Optimized Continual Learning with Attention mechanism (BOCL) to solve this fundamental issue.

*: Equal contribution

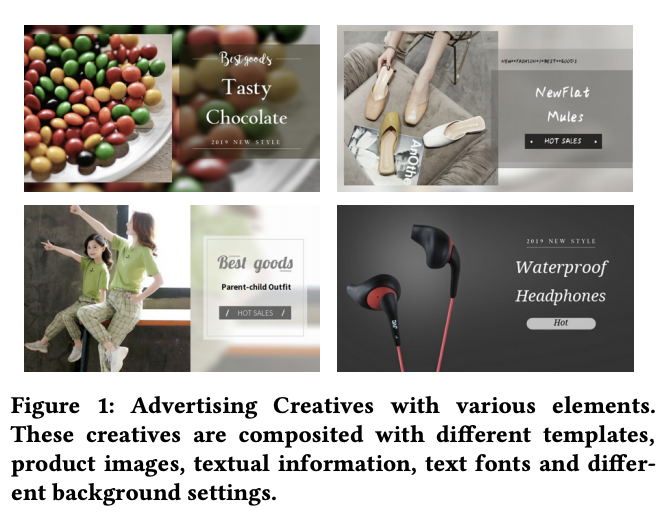

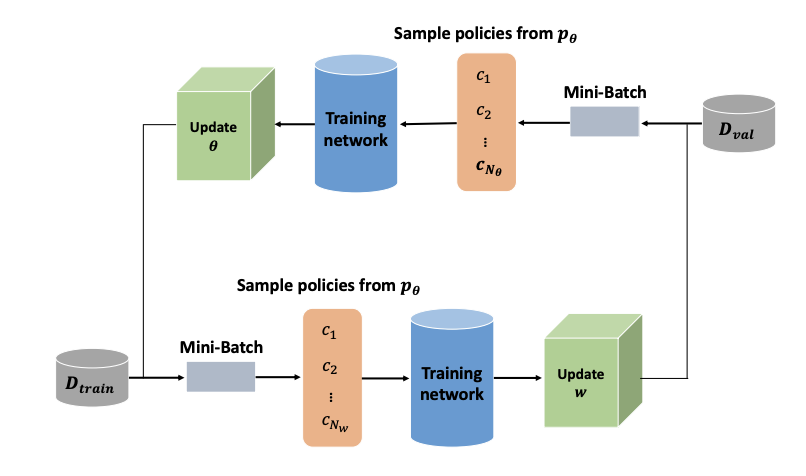

We propose an Automated Creative Optimization (AutoCO) framework to model complex interactions between creative elements and to balance exploration and exploitation. Specifically, motivated by AutoML, we propose one-shot search algorithms for finding effective interaction functions between elements.

In this paper, we propose an automatic data augmentation framework (ASNG) that searches for the optimal augmentation policy, particularly for 3D medical image segmentation tasks. It is the first automatic data augmentation work in the whole semantic segmentation field.

*: Equal contribution

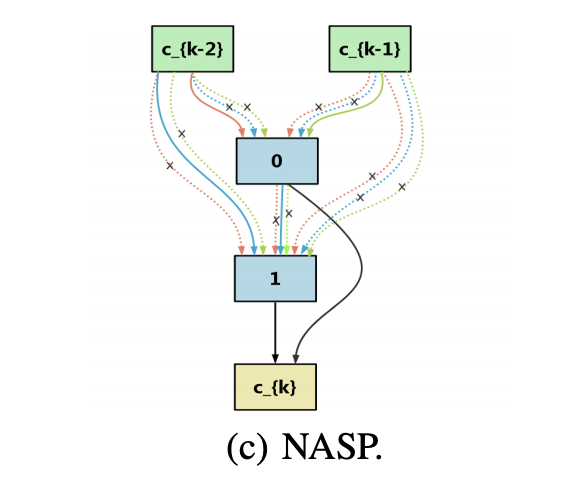

In this paper, we propose a new differentiable Neural Architecture Search method based on Proximal gradient descent (denoted as NASP). Unlike DARTS, NASP reformulates the search process as an optimization problem with a constraint that only one operation is allowed to be updated during forward and backward propagation. Experiments on various tasks demonstrate that NASP can obtain high-performance architectures with a 10-fold speedup in computation time over DARTS.

*: Equal contribution
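The one-operation constraint described for NASP above can be illustrated with a small proximal-style loop. This is only a sketch based on that description, not the authors' implementation: the toy quadratic loss, the function names (`project_one_hot`, `toy_loss_grad`, `search`), the learning rate, and the choice to clip the continuous weights to [0, 1] are all assumptions made for illustration.

```python
import numpy as np


def project_one_hot(alpha):
    """Keep a single operation per edge: 1 at each row's largest weight, 0 elsewhere."""
    discrete = np.zeros_like(alpha)
    rows = np.arange(alpha.shape[0])
    discrete[rows, alpha.argmax(axis=1)] = 1.0
    return discrete


def toy_loss_grad(discrete, target):
    """Gradient of the hypothetical loss 0.5 * ||discrete - target||^2."""
    return discrete - target


def search(alpha, target, lr=0.1, steps=50):
    """Proximal-style search: evaluate at a discrete point, update continuous weights."""
    for _ in range(steps):
        # Only one operation per edge is active when the loss is evaluated.
        discrete = project_one_hot(alpha)
        # The gradient is taken at the discrete architecture, not at alpha.
        grad = toy_loss_grad(discrete, target)
        # Gradient step on the continuous weights, then an assumed box constraint.
        alpha = np.clip(alpha - lr * grad, 0.0, 1.0)
    return project_one_hot(alpha)


# Two edges, three candidate operations each (hypothetical sizes).
target = np.array([[0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
alpha0 = np.array([[0.6, 0.4, 0.0], [0.2, 0.5, 0.3]])
print(search(alpha0, target))
```

In this toy setting the continuous weights drift until the selected operation on each edge matches the one favoured by the loss, after which the gradient vanishes and the selection stays fixed. A real search would replace `toy_loss_grad` with a validation loss computed through a network.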

Most artificial intelligence models have limited ability to solve new tasks quickly without forgetting previously acquired knowledge. The recently emerging paradigm of continual learning, in which the model learns various tasks sequentially, aims to solve this issue. In this work, we propose a novel approach for continual learning that searches for the best neural architecture for each incoming task via carefully designed reinforcement learning strategies. We name it Reinforced Continual Learning. Our method not only performs well at preventing catastrophic forgetting but also fits new tasks well. Experiments on sequential classification tasks for variants of the MNIST and CIFAR-100 datasets demonstrate that the proposed approach outperforms existing continual learning alternatives for deep networks.

Though neural networks have achieved much progress in various applications, it is still highly challenging for them to learn from a continuous stream of tasks without forgetting. Continual learning, a new learning paradigm, aims to solve this issue. In this work, we propose a new model for continual learning, called Bayesian Optimized Continual Learning with Attention Mechanism (BOCL), that dynamically expands the network capacity upon the arrival of new tasks by Bayesian optimization and selectively utilizes previous knowledge (e.g. feature maps of previous tasks) via an attention mechanism. Our experiments on variants of MNIST and CIFAR-100 demonstrate that our method outperforms the state of the art in both preventing catastrophic forgetting and fitting new tasks.

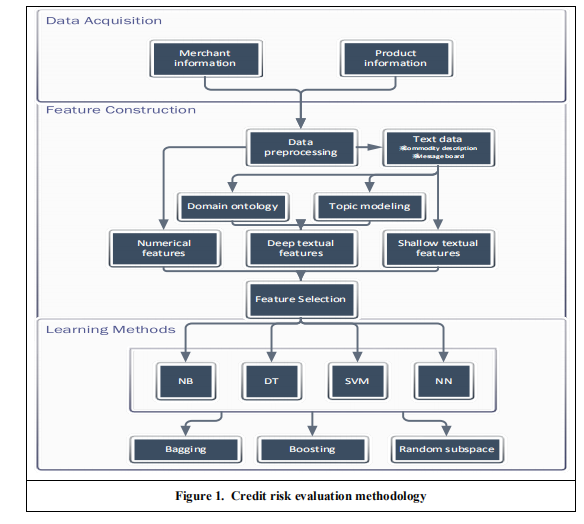

The credit score of a second-hand merchant is very important to purchasers: accurate merchant credit scores can reduce fraud and improve the user experience. This article supports the evaluation of merchant reputation by using a topic model to extract merchant characteristics and building a machine learning model to score the reputation of second-hand merchants.